Configuring grid presets

A grid preset contains information needed for jobs to be handled by the CLC Server and submitted to the grid scheduling system. Multiple grid presets can be configured. Users specify the relevant grid preset when submitting a job (see Starting grid jobs). Each grid preset includes the location to deploy a CLC Grid Worker to. The CLC Grid Worker is used to run the CLC job on a grid node.

CLC Grid Worker version and deployment

Each time a grid preset is saved, all grid presets are (re)validated. This includes checking that the version of each CLC Grid Worker matches the CLC Server version installed on the master, and checking that all essential folders are present. If either of these checks fails, the CLC Grid Worker is redeployed. These checks are also carried out when the CLC Server is restarted.

When plugins are installed on or removed from the master, the contents of each CLC Grid Worker's plugin installation directory are updated, ensuring the same plugins are present there as are present on the master.

Configuring grid presets

To configure a grid preset, go to:

Configuration | Job processing

In the Grid setup section, under Grid Presets, click on the Add New Grid Preset... button.

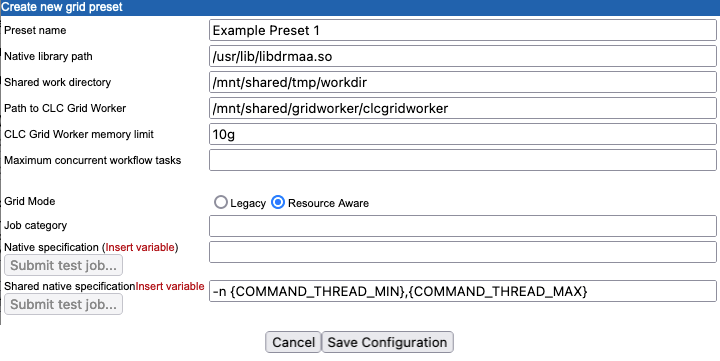

Figure 7.6: The grid preset configuration form. The Shared native specification field is only visible when the Resource Aware grid mode is selected.

- Preset name

- The preset name will be specified by users when submitting a job to the grid.

Preset names can contain alphanumeric characters and hyphens. Hyphens cannot be used at the start of preset names.

Note: Changing an existing grid preset's name, in effect, creates a new preset. Any access restrictions that have been applied using the old name must be reconfigured for the new preset.

- Native library path

- The full path to the grid-specific DRMAA library.

- Shared work directory

- The path to a directory that can be accessed by both the CLC Server and the Grid Workers. Temporary directories are created within this area during each job run to hold files used for communication between the CLC Server and Grid Worker.

- Path to CLC Grid Worker

- The path to a directory on a shared file system that is readable from all execution hosts. If this directory does not exist, it will be created.

A CLC Grid Worker includes a JRE matching the CLC Server JRE, associated settings files and essential folders (e.g. clcgridworker, grid_cpa, jre). It is extracted from the installation area of the CLC Server software and is deployed to the specified location when the grid preset is saved.

Multiple grid presets can refer to the same grid worker. Defining different grid worker locations in different presets results in multiple grid workers being deployed.

- CLC Grid Worker memory limit

- The maximum amount of memory the CLC Grid Worker java process should use. If left blank, the default memory limit is used.

If providing a value in this field, provide a number followed by a unit, 'g', 'm' or 'k' for gigabytes, megabytes or kilobytes respectively. For example, "10g" limits the CLC Grid Worker java process to a maximum of 10 GB of memory.

Leaving this field blank, i.e. using the default value, should suit most circumstances. See Understanding memory settings for details.

- Maximum concurrent workflow tasks

- The maximum number of concurrent jobs that can be run as part of a workflow execution on a given grid node. See Multi-job processing on grid for further details about this setting.

- Grid mode

- There are two grid modes for backwards compatibility reasons. The "Resource Aware" mode is generally recommended. Choosing this mode allows jobs that require few resources to run concurrently on a given node. For this, the field Shared native specification must also be configured. This is described further below. If "Legacy mode" is selected, all jobs submitted to the grid from the CLC Server will request use of the entire node. "Legacy Mode" is the default, but this may change in the future.

- Job category

- The name of the job category - a parameter passed to the underlying grid system.

- Native specification and Shared native specification

- Parameters to be passed to the grid scheduler are specified here. For example, a specific grid queue or limits on numbers of cores.

The Native specification field contains the information to be passed to the grid scheduler for exclusive jobs, those where the whole execution node will be used.

The Shared native specification contains the information to be passed to the grid scheduler for jobs classified as non-exclusive. Such jobs can share the execution node with other jobs. This specification is only visible and configurable if the "Resource Aware" grid mode is selected.

Parameters entered in these fields generally match those given directly to the submission command. Please refer to the official documentation for the specific DRMAA library being used for details. Links to some DRMAA related documentation are provided in DRMAA libraries.

See Multi-job processing on grid for details about configuring concurrent job execution.
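The format rules given above for the Preset name and CLC Grid Worker memory limit fields can be sketched as a pre-check run before entering values in the form. This is a minimal illustration and not the validation performed by the CLC Server. The function names are hypothetical, and it assumes ASCII alphanumerics only, lowercase unit letters, and that a blank memory limit means the default is used.

```python
import re

# Assumed patterns, derived only from the rules stated in this section.
# Preset name: alphanumeric characters and hyphens, not starting with a hyphen.
PRESET_NAME = re.compile(r"[A-Za-z0-9][A-Za-z0-9-]*")
# Memory limit: a number followed by the unit 'g', 'm' or 'k'.
MEMORY_LIMIT = re.compile(r"[0-9]+[gmk]")


def is_valid_preset_name(name: str) -> bool:
    """Return True if the name follows the preset naming rules above."""
    return PRESET_NAME.fullmatch(name) is not None


def is_valid_memory_limit(value: str) -> bool:
    """Return True for a blank value (default limit) or a value such as '10g'."""
    return value == "" or MEMORY_LIMIT.fullmatch(value) is not None


print(is_valid_preset_name("slurm-shared"))  # True
print(is_valid_preset_name("-slurm"))        # False: starts with a hyphen
print(is_valid_memory_limit("10g"))          # True
print(is_valid_memory_limit("10 GB"))        # False: not number plus unit letter
```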

Adding variables for evaluation at runtime

Grid presets are essentially static in nature, with most options being defined directly in the preset itself. However, variables are available that will be evaluated at runtime if included in the specification. These variables, described below, can be entered into the specification field by:

- Clicking on the Insert variable link and choosing the variable to insert. The selected variable, with the required syntax, will be added where the cursor is positioned within the specification field.

- Typing the variable name within a pair of curly brackets directly into the specification field.

Note: The parameters in the descriptions below are for illustrative purposes only. They do not reflect available options for any particular grid scheduler.

- USER_NAME

- The name of the user who submitted the job.

This variable could be added to log usage statistics, or to send an email to an address that includes the contents of this variable, for example:

--email-address {USER_NAME}@yourmailserver.com

- COMMAND_NAME

- The name of the CLC Server command to be executed on the grid by the clcgridworker executable.

For example, if queues existed for the execution of certain commands, and those queues had names corresponding to the command names, the command name (and thus the relevant queue name) could be included in the native specification using something like the following:

--queue {COMMAND_NAME}

- COMMAND_ID

- The ID of the CLC Server command to be executed on the grid.

- COMMAND_THREAD_MIN

- A value passed by non-exclusive jobs indicating the minimum number of threads required to run the command being submitted. This variable is only valid for Shared native specifications.

- COMMAND_THREAD_MAX

- A value passed by non-exclusive jobs indicating the maximum number of threads supported by the command being submitted. This variable is only valid for Shared native specifications.
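To make the effect of runtime evaluation concrete, the sketch below substitutes variables written in curly brackets into a specification string. It only illustrates the expected result and is not the CLC Server's implementation. The function name, the example values "jdoe" and "read_mapping", and the choice to leave unknown names untouched are all assumptions. As in the descriptions above, the --queue and --email-address parameters are illustrative only.

```python
import re


def expand_variables(spec: str, values: dict[str, str]) -> str:
    """Replace each {NAME} in spec with values[NAME].

    Names not present in values are left untouched (an assumption; the
    behavior for unknown names is not described in this section).
    """
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        return values.get(name, match.group(0))

    # Variable names are upper-case letters and underscores, e.g. USER_NAME.
    return re.sub(r"\{([A-Z_]+)\}", substitute, spec)


# Hypothetical values for a single submitted job.
job_values = {"USER_NAME": "jdoe", "COMMAND_NAME": "read_mapping"}

spec = "--queue {COMMAND_NAME} --email-address {USER_NAME}@yourmailserver.com"
print(expand_variables(spec, job_values))
# --queue read_mapping --email-address jdoe@yourmailserver.com
```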

Using functions in native specifications

Two functions can be used in native specifications: take_lower_of and take_higher_of. These are invoked with the syntax {#function arg1, arg2, [... argn]}.

These functions are anticipated to be primarily of use in Shared native specifications when limiting the number of threads or cores that could be used by a non-exclusive job, and where the grid system requires a fixed number to be specified, rather than a range.

- take_lower_of

- Evaluates to the lowest integer value among its arguments.

- take_higher_of

- Evaluates to the highest integer value among its arguments.

In both cases, the allowable arguments are integers or variable names. If an argument provided is a string that is not a variable name, or if the variable expands to a non-integer, the argument is ignored. For instance {#take_lower_of 8,4,FOO} evaluates to 4 and ignores the non-integer, non-variable "FOO" string. Similarly, {#take_higher_of 8,4,FOO} evaluates to 8 and ignores the non-integer, non-variable "FOO" string.
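The evaluation rules just described can be sketched in a few lines. This is an illustration of the documented behavior under stated assumptions, not the CLC Server's parser. The function name evaluate_call is hypothetical, and returning None when no usable integer argument remains is an assumption, since that case is not described here.

```python
import re

# Matches the documented call syntax, e.g. {#take_lower_of 8,4,FOO}.
FUNCTION_CALL = re.compile(r"\{#(take_lower_of|take_higher_of)\s+([^}]*)\}")


def evaluate_call(expression, variables=None):
    """Evaluate a take_lower_of / take_higher_of call to an integer.

    Arguments that are neither integers nor variables expanding to
    integers are ignored, as described above.
    """
    variables = variables or {}
    match = FUNCTION_CALL.fullmatch(expression.strip())
    if match is None:
        raise ValueError(f"not a recognised function call: {expression!r}")
    name, raw_args = match.groups()

    integers = []
    for token in raw_args.split(","):
        token = token.strip()
        # A variable name is replaced by its value; anything else is used as is.
        value = variables.get(token, token)
        try:
            integers.append(int(value))
        except (TypeError, ValueError):
            continue  # ignore non-integer arguments

    if not integers:
        return None  # assumption: no usable arguments yields no value
    return min(integers) if name == "take_lower_of" else max(integers)


print(evaluate_call("{#take_lower_of 8,4,FOO}"))   # 4
print(evaluate_call("{#take_higher_of 8,4,FOO}"))  # 8
# With a variable: cap the threads requested at 16 (hypothetical values).
print(evaluate_call("{#take_lower_of COMMAND_THREAD_MAX,16}",
                    {"COMMAND_THREAD_MAX": "32"}))  # 16
```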

An example of use of the take_lower_of function in the context of running concurrent jobs on a given grid node is provided in Multi-job processing on grid.

SLURM-specific native specification examples

For illustrative purposes, below are examples of SLURM-specific arguments that could be provided in the Native specification field of a grid preset.

Example 1: To submit to the queue/partition highpriority and specify the account for the job as the username of the user logged into the CLC Server:

-p highpriority -A {USER_NAME}

The above specification would have the same effect as the following sbatch command:

sbatch -p highpriority -A name_of_user my_script

Example 2: To redirect standard output and standard error:

-o <path/to/standard_out> -e <path/to/error_out>

The above specification would have the same effect as the following sbatch command:

sbatch -o <path/to/standard_out> -e <path/to/error_out> my_script