Hierarchical clustering of features

A hierarchical clustering of features is a tree presentation of the similarity in expression profiles of the features over a set of samples (or groups).

The tree structure is generated by

- letting each feature be a cluster

- calculating pairwise distances between all clusters

- joining the two closest clusters into one new cluster

- iterating 2-3 until there is only one cluster left (which will contain all samples).

To start the clustering of features:

Toolbox | Expression Analysis (![]() )| Feature Clustering (

)| Feature Clustering (![]() ) | Hierarchical Clustering of Features (

) | Hierarchical Clustering of Features (![]() )

)

Select at least two samples ( (![]() ) or (

) or (![]() )) or an experiment (

)) or an experiment (![]() ).

).

Note! If your data contains many features, the clustering will take very long time and could make your computer unresponsive. It is recommended to perform this analysis on a subset of the data (which also makes it easier to make sense of the clustering. Typically, you will want to filter away the features that are thought to represent only noise, e.g. those with mostly low values, or with little difference between the samples). See how to create a sub-experiment in Creating sub-experiment from selection.

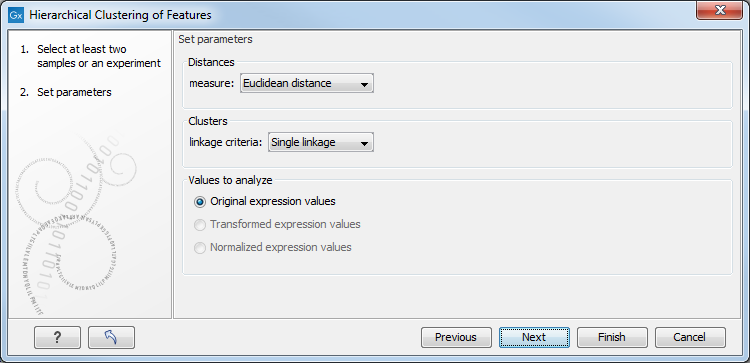

Clicking Next will display a dialog as shown in figure 23.39. The hierarchical clustering algorithm requires that you specify a distance measure and a cluster linkage. The distance measure is used specify how distances between two features should be calculated. The cluster linkage specifies how you want the distance between two clusters, each consisting of a number of features, to be calculated.

Figure 23.39: Parameters for hierarchical clustering of features.

There are three kinds of Distance measures:

- Euclidean distance. The ordinary distance between two points - the length of the segment connecting them. If

and

and

,

then the Euclidean distance between

,

then the Euclidean distance between  and

and  is

is

- 1 - Pearson correlation. The Pearson correlation coefficient between two elements

and

and

is defined as

where

is defined as

where

is the average of values in

is the average of values in  and

and  is the sample standard deviation of these values.

It takes a value

is the sample standard deviation of these values.

It takes a value

![$ \in [-1,1]$](img117.gif) . Highly correlated elements have a high absolute value of the Pearson correlation, and elements whose values are un-informative about each other have Pearson correlation 0. Using

. Highly correlated elements have a high absolute value of the Pearson correlation, and elements whose values are un-informative about each other have Pearson correlation 0. Using

as distance measure means that elements that are highly correlated will have a short distance between them, and elements that have low correlation will be more distant from each other.

as distance measure means that elements that are highly correlated will have a short distance between them, and elements that have low correlation will be more distant from each other.

- Manhattan distance. The Manhattan distance between two points is the distance measured along axes at right angles. If

and

and

,

then the Manhattan distance between

,

then the Manhattan distance between  and

and  is

is

The possible cluster linkages are:

- Single linkage. The distance between two clusters is computed as the distance between the two closest elements in the two clusters.

- Average linkage. The distance between two clusters is computed as the average distance between objects from the first cluster and

objects from the second cluster. The averaging is performed over all pairs

, where

, where  is an object from the first cluster and

is an object from the first cluster and  is an object

from the second cluster.

is an object

from the second cluster.

- Complete linkage. The distance between two clusters is computed as the maximal object-to-object distance

, where

, where  comes from the first cluster,

and

comes from the first cluster,

and  comes from the second cluster. In other words, the distance between two clusters is computed as the distance between the two farthest objects in the two clusters.

comes from the second cluster. In other words, the distance between two clusters is computed as the distance between the two farthest objects in the two clusters.

At the bottom, you can select which values to cluster (see Selecting transformed and normalized values for analysis).

Click Finish to start the tool.

Subsections